There has been so much buzz, information, opinion and prediction about ChatGPT since its launch (30. 11. 2022) that we cannot fit it into a standard DSB issue. This page (which will be updated from time to time) is our single point of reference for sources about ChatGPT, so that the regular issues can focus on techniques that are (as of now) more usable in industry.

The model / Analytical insights

ChatGPT (sometimes called GPT3.5) is a large language model built for text generation (GPT stands for Generative Pre-trained Transformer). The number is the model's “version”; so far, a higher number has meant more parameters in the model (and more time, energy and resources needed for training). GPT4 is probably in the making.

The best place to start is the official page with a high-level overview. The core innovation is a technique called “Reinforcement Learning from Human Feedback” (RLHF), which comes from InstructGPT and its well-written paper. The idea probably goes back to the 2020 paper “Learning to summarize from human feedback”, which explores the impact of human feedback on the quality of model output.

The last key paper behind ChatGPT is “Scaling Laws for Reward Model Overoptimization”, which builds on the InstructGPT reward model and studies what happens when the reinforcement learning methods involved optimize too hard against it.
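To make the reward model idea a bit more concrete, here is a minimal sketch of the pairwise comparison loss described in “Learning to summarize from human feedback” and reused in InstructGPT: a human picks the better of two outputs, and the reward model is trained so that the preferred output scores higher. The function names and the toy scores are our own illustration (assumptions, not code from the papers); in the real setup the scores come from a neural network, not hand-written numbers.

```python
import math


def pairwise_preference_loss(reward_preferred, reward_rejected):
    """Loss for one human comparison: -log(sigmoid(r_preferred - r_rejected)).

    Hypothetical helper for illustration; both arguments are scalar scores
    that a reward model assigned to two outputs for the same prompt.
    """
    margin = reward_preferred - reward_rejected
    # -log(sigmoid(m)) equals softplus(-m); this form avoids overflow
    # for large positive or negative margins.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


def mean_preference_loss(scored_pairs):
    """Average the loss over (reward_preferred, reward_rejected) pairs."""
    losses = [pairwise_preference_loss(p, r) for p, r in scored_pairs]
    return sum(losses) / len(losses)


if __name__ == "__main__":
    # Toy scores (made up): the first pair agrees with the human label,
    # the second pair disagrees and is therefore penalized more.
    toy_pairs = [(2.0, 0.5), (-1.0, 1.0)]
    for preferred, rejected in toy_pairs:
        print(preferred, rejected, pairwise_preference_loss(preferred, rejected))
    print("mean loss:", mean_preference_loss(toy_pairs))
```

The loss is small when the reward model already ranks the human-preferred output higher and grows roughly linearly when it ranks the pair the wrong way round. The trained reward model is then used as the signal for the reinforcement learning step.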

There are many more important papers and references; we picked the ones we think are the most important entry points.

Method description elsewhere

Reading papers can be cumbersome, so in this section we list decent to great articles that describe the methods used and give insights into (Chat)GPT.

https://www.surgehq.ai/blog/introduction-to-reinforcement-learning-with-human-feedback-rlhf-series-part-1 – A great introduction to what RLHF is and how it turns GPT3 into ChatGPT. An approachable article full of examples.

https://huggingface.co/blog/rlhf – Hugging Face's overview of the ChatGPT method. This one dives a level deeper than SurgeHQ, describing the model and methods in detail. Its “Further reading” section is full of references that extend our list with papers that influenced ChatGPT and the RLHF area in general.

IOHO (in our humble opinion), implementing things and playing around with the model itself is the best teacher. ChatGPT is still waiting for an open-source implementation (some predict there will be many of them this year), but educational GPT implementations exist. The best are from Andrej Karpathy: minGPT (aimed at education) and nanoGPT (usable in practice). Go ahead and explore them; he does an incredible job of explaining ML!

https://github.com/BlinkDL/ChatRWKV – The first attempt we stumbled upon that tries to mimic ChatGPT capabilities with a different network architecture. Different architectures, efficient training and efficient inference are going to be hot areas of research this year.

Competitors

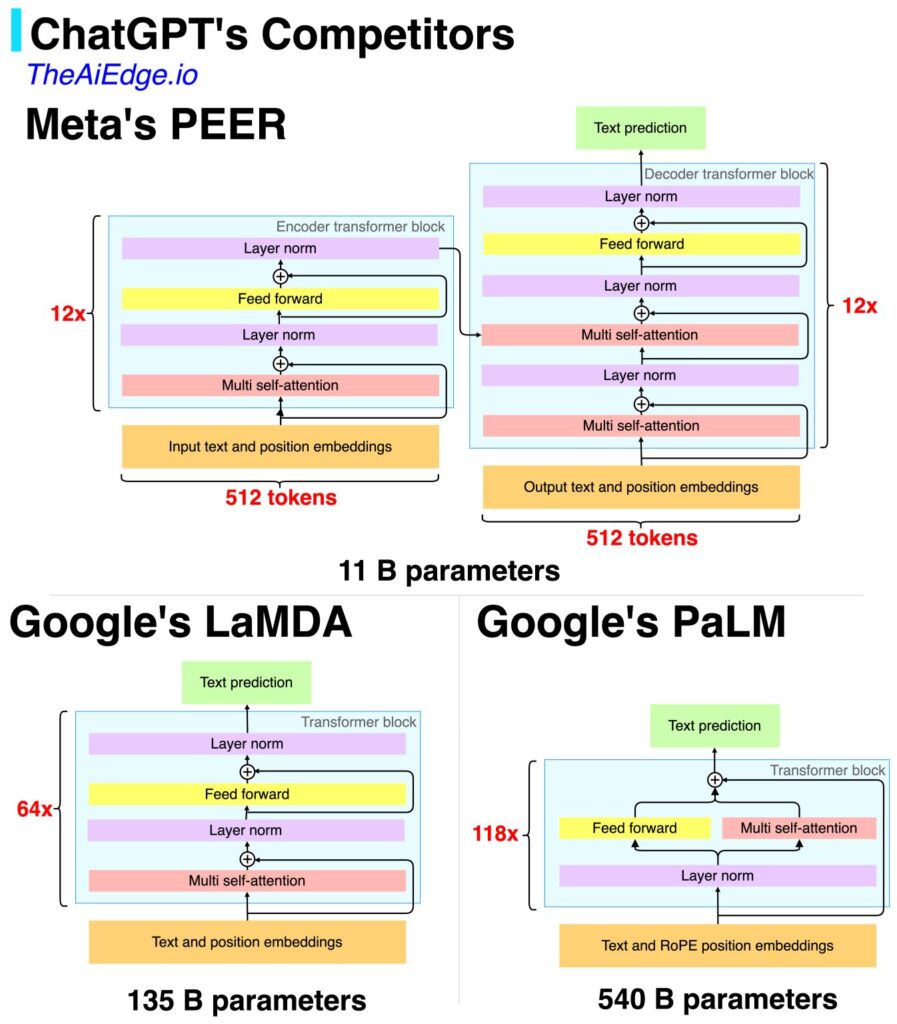

ChatGPT is far ahead in PR, but it is not the only contender among generative models and LLMs. Here is a comparison of the architectures and parameter counts of Meta’s PEER and Google’s LaMDA and PaLM.

LaMDA (Google): this one made some buzz around the net. There is a decent arxiv paper, the original blog post with an interesting amount of focus on responsible AI, and then the BBC article about the claim that LaMDA is sentient. It is also the LLM mentioned most often as competition for ChatGPT. But since it has not been released to the public, we cannot know or estimate how the two compare.

PaLM (Google): Here Google went for all the resources it could, and PaLM is roughly 3x larger than GPT3 in number of parameters (540 billion versus 175 billion). See the original paper and the follow-up blog post. It is also the first place we noticed Google's Model Cards idea.

Galactica (Meta): Meta's public attempt to introduce a cool LLM. It went horribly. There is the original paper, but sadly we could not find the original page about it on the Meta AI pages.

PEER (Meta): the original arxiv paper; sadly, they did not share any write-up.

Opinions and News

We have yet to find an AI expert who has not shared an opinion about ChatGPT. We handpicked the interesting ones (December and January were basically a flood of articles and opinions) and put them in chronological order:

- 9. 12. 2022 Why Google missed ChatGPT: https://www.bigtechnology.com/p/why-google-missed-chatgpt (Spoilers: they are careful, not behind)

- 9. 12. 2022 WIRED's take on the issues LLMs have, correctly criticizing the lack of effort in fixing them: https://www.wired.com/story/large-language-models-critique/

- 21. 12. 2022 Code red at Google: https://finance.yahoo.com/news/googles-management-reportedly-issued-code-190131705.html

- 10. 1. 2023 Microsoft reportedly invests 10 billion dollars in OpenAI: https://www.cnbc.com/2023/01/10/microsoft-to-invest-10-billion-in-chatgpt-creator-openai-report-says.html

- 16. 1. 2023 Azure provides OpenAI models (not ChatGPT yet) as a Service: https://azure.microsoft.com/en-us/blog/general-availability-of-azure-openai-service-expands-access-to-large-advanced-ai-models-with-added-enterprise-benefits

- 17. 1. 2023 News about GPTZero, detection of generated text: https://www.npr.org/sections/money/2023/01/17/1149206188/this-22-year-old-is-trying-to-save-us-from-chatgpt-before-it-changes-writing-for

- 23. 1. 2023 Yann LeCun comments on ChatGPT: https://www.zdnet.com/article/chatgpt-is-not-particularly-innovative-and-nothing-revolutionary-says-metas-chief-ai-scientist/

- 27. 1. 2023 https://www.washingtonpost.com/technology/2023/01/27/chatgpt-google-meta/

- 1. 2. 2023 Microsoft integrates ChatGPT inside MS Teams: https://www.microsoft.com/en-us/microsoft-365/blog/2023/02/01/microsoft-teams-premium-cut-costs-and-add-ai-powered-productivity/

- 1. 2. 2023 OpenAI introduces paid access to ChatGPT (called ChatGPT Plus): https://searchengineland.com/chatgpt-plus-launches-392558

- 6. 2. 2023 Google introduces Bard, its contender based on LaMDA: https://blog.google/technology/ai/bard-google-ai-search-updates/

- 7. 2. 2023 MS moving ChatGPT into Bing and Edge: https://www.theverge.com/2023/2/7/23587454/microsoft-bing-edge-chatgpt-ai

- 8. 2. 2023 First critique of Bard, nothing but the same old issues all LLMs suffer from today: https://www.theverge.com/2023/2/8/23590864/google-ai-chatbot-bard-mistake-error-exoplanet-demo

- 13. 2. 2023 ChatGPT (inside Bing) also makes mistakes during the official demo: https://dkb.blog/p/bing-ai-cant-be-trusted

If you think we have missed an interesting article or source on ChatGPT, please write us a comment or use the standard recommendation form.
